Creating a Data Platform with Azure

A client of Basetis needed a batch job to process millions of rows per day. The idea was to create a data platform that would run this batch and also allow data scientists to work with the data.

The whole platform also had to be integrated with DevOps.

40 million rows processed per day

8 Data Scientists / Analysts using the platform

More than 5 clusters (20 servers) working

I did this project while working with a client of Basetis.

The aim of this project was to create a data platform using Microsoft Azure. As part of the data platform, I was asked to create an ETL to process millions of rows of data each day.

This data platform would also allow Data Scientists (DS) and Data Analysts (DA) to work with the project's data. I was also asked to provide a package that would let both DS and DA interact with that data easily.

On top of that, everything had to be integrated with Azure DevOps, and there had to be two environments: one for production and one for pre-production.

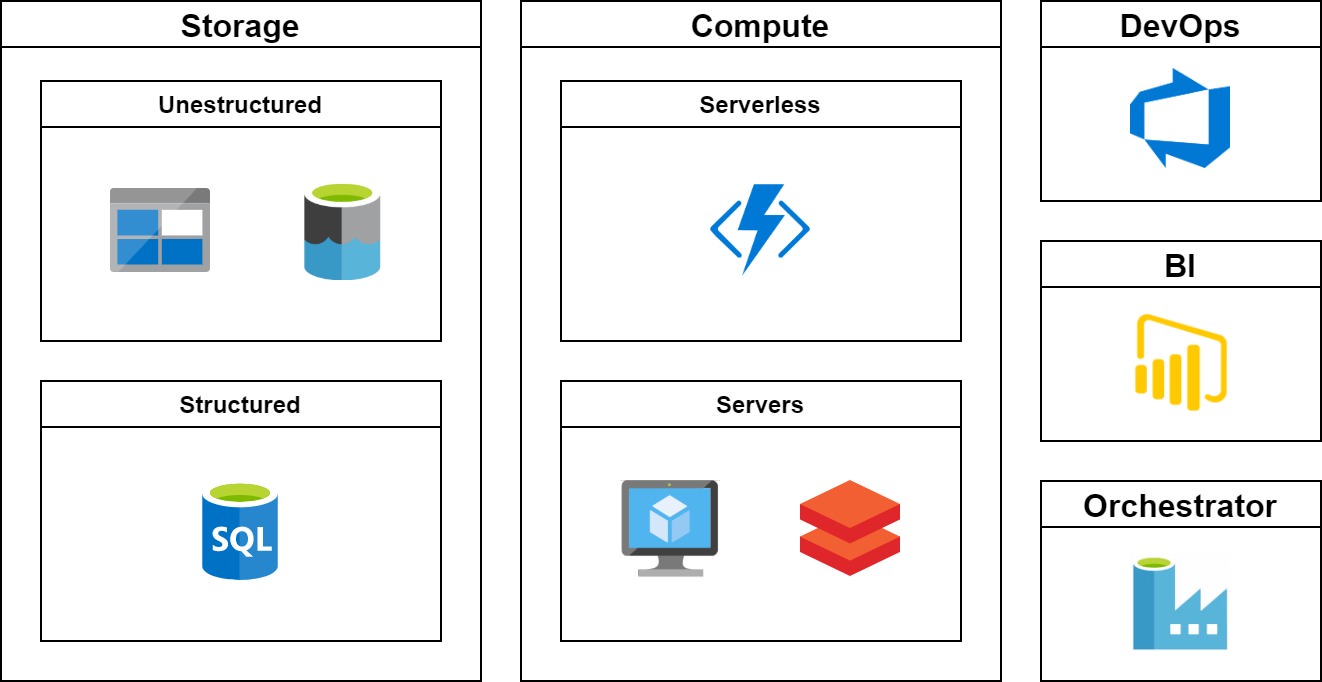

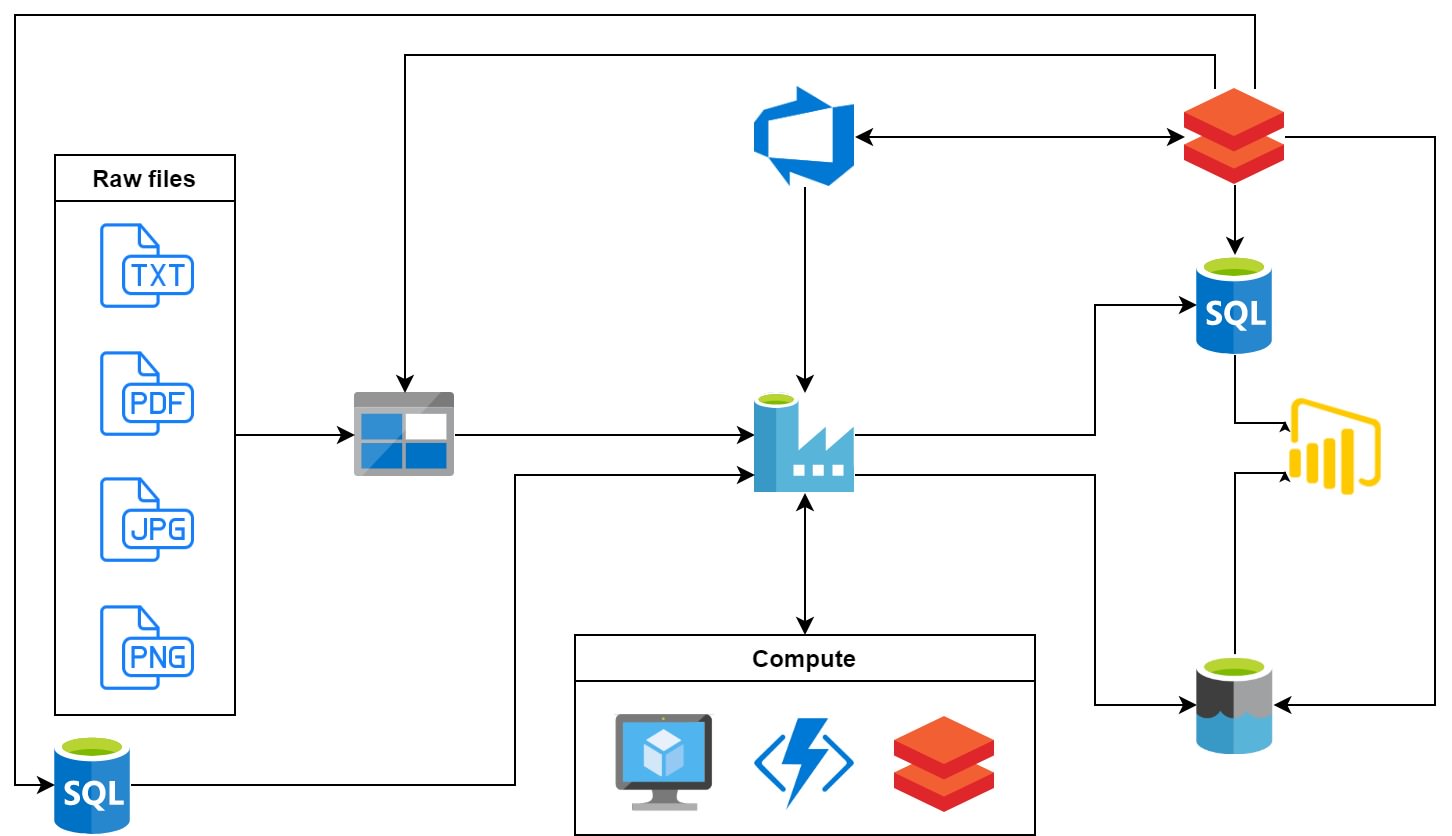

Each day, some files had to be processed and transformed using data from a foreign SQL database. To receive and process those files, we used blob storage.
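To make the "transform incoming files with data from a SQL database" step more concrete, here is a minimal sketch in plain Python. It is not the project's code: the real pipeline ran on Spark, and here `sqlite3` stands in for the foreign SQL database and an in-memory string stands in for a file landed in blob storage. The table `customers`, the columns `customer_id`, `segment` and `amount`, and the functions `load_reference` and `enrich` are all hypothetical names chosen for illustration.

```python
import csv
import io
import sqlite3


def load_reference(conn: sqlite3.Connection) -> dict[str, str]:
    """Read the lookup table once so each row can be enriched in memory."""
    rows = conn.execute("SELECT customer_id, segment FROM customers")
    return {customer_id: segment for customer_id, segment in rows}


def enrich(raw_csv: str, reference: dict[str, str]) -> list[dict[str, str]]:
    """Attach the reference value to every row of the incoming file."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    return [
        {**row, "segment": reference.get(row["customer_id"], "unknown")}
        for row in reader
    ]


# Stand-in for the foreign SQL database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id TEXT PRIMARY KEY, segment TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("c1", "retail"), ("c2", "corporate")],
)

# Stand-in for one daily file received in blob storage.
daily_file = "customer_id,amount\nc1,10.5\nc2,99.0\nc3,1.0\n"

processed = enrich(daily_file, load_reference(conn))
for row in processed:
    print(row)
```

Rows whose key is missing from the reference table are kept and marked `unknown` rather than dropped. That is one possible design choice for a daily batch, not necessarily the one used in the project.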

Then a data lake was created to store the processed data and to serve the DS and DA.

Finally, there was another SQL database for storing some results and for connecting Power BI.

To orchestrate all the jobs we used Azure Data Factory (ADF), with Databricks as the compute engine. We also made Databricks the default tool for interacting with the data, since it allowed everybody to work with Spark.

Finally we used Azure DevOps to integrate all of that.

Since there were both a PRO and a PRE environment, all of the above was cloned and automated using Azure DevOps.
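One way to picture "cloning" the platform for two environments is to derive every resource label from the environment name, so that PRE and PRO always contain the same set of components. The sketch below only illustrates that idea. The `Environment` class, the `dataplatform` prefix and the resource labels are assumptions, not the project's real names, and they are not checked against Azure naming rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str                      # "pre" or "pro"
    project: str = "dataplatform"  # hypothetical prefix

    def resource(self, kind: str) -> str:
        """Build a label such as 'dataplatform-adf-pre'."""
        return f"{self.project}-{kind}-{self.name}"


ENVIRONMENTS = {name: Environment(name) for name in ("pre", "pro")}

# Components described in this post; the short labels are illustrative.
KINDS = ("blob", "datalake", "sql", "adf", "databricks")


def resources_for(env_name: str) -> dict[str, str]:
    """Return the full set of resource labels for one environment."""
    env = ENVIRONMENTS[env_name]
    return {kind: env.resource(kind) for kind in KINDS}


for env_name in ENVIRONMENTS:
    print(env_name, resources_for(env_name))
```

Because both environments are generated from the same list of components, they cannot drift apart in structure. Only the suffix changes.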

The batch itself needed to process around 40 million rows per day, so we decided to use Spark. It was easy to set up because we used the Databricks platform, which integrates smoothly with Azure.
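To get a feel for what 40 million rows per day means, this small calculation converts the daily volume into the average throughput needed for a given batch window. The window lengths are hypothetical, since the actual processing window is not stated here, and `required_rows_per_second` is a helper written only for this example.

```python
def required_rows_per_second(rows_per_day: int, window_hours: float) -> float:
    """Average throughput needed to finish the batch inside the window."""
    if window_hours <= 0:
        raise ValueError("window_hours must be positive")
    return rows_per_day / (window_hours * 3600)


ROWS_PER_DAY = 40_000_000  # daily volume from the project

for hours in (1, 4, 24):  # hypothetical batch windows
    rate = required_rows_per_second(ROWS_PER_DAY, hours)
    print(f"{hours:>2} h window -> {rate:,.0f} rows/s")
```

The shorter the window, the higher the sustained rate, which is where a distributed engine such as Spark becomes attractive.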